
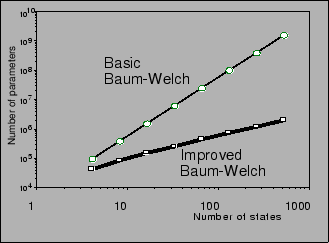

Figure 1 plots the number of word output probabilities against the number of states for the Ergodic HMM. The thin line shows the result for the basic Baum-Welch algorithm, and the thick line shows the result for the improved Baum-Welch algorithm. The improved algorithm yields far fewer word output probabilities than the basic one. Because these probabilities must be stored and re-estimated during training, the improved algorithm greatly reduces both memory requirements and computational cost.