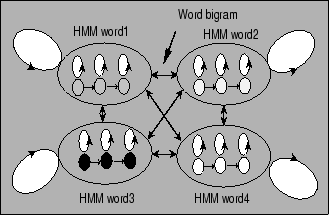

Connecting the word HMMs to one another through word bigram probabilities turns the whole network into a single large ergodic HMM with many states and transition probabilities. This ergodic HMM is shown in Fig. 1.
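The construction of the composite model can be illustrated with a minimal Python/NumPy sketch. It assumes that each word HMM is given as a within-word transition matrix plus a vector of exit probabilities (the probability of leaving the word from each state), and that every word is entered at its first state. Under these assumptions, leaving word `u` and entering word `v` is weighted by the bigram probability P(v | u). The function name `build_composite_hmm`, its input format, and the exit-probability convention are hypothetical and are not necessarily the exact topology behind Fig. 1.

```python
import numpy as np


def build_composite_hmm(word_hmms, bigram):
    """Join word HMMs into one large HMM using word bigram probabilities.

    word_hmms: dict word -> (A, exit_probs), where A is the (n, n) within-word
               transition matrix and exit_probs is an (n,) vector with
               A[i].sum() + exit_probs[i] == 1 for every state i (assumed).
    bigram:    dict (prev_word, next_word) -> P(next_word | prev_word).
    Returns (words, offsets, T): word order, first-state index of each word,
    and the composite transition matrix T.
    """
    words = sorted(word_hmms)
    offsets, total = {}, 0
    for w in words:
        offsets[w] = total
        total += word_hmms[w][0].shape[0]

    T = np.zeros((total, total))
    for u in words:
        A_u, exit_u = word_hmms[u]
        o_u, n_u = offsets[u], A_u.shape[0]
        # Within-word transitions form a block on the diagonal.
        T[o_u:o_u + n_u, o_u:o_u + n_u] = A_u
        for v in words:
            p = bigram.get((u, v), 0.0)
            if p > 0.0:
                # Exit word u from any state, enter word v at its first state.
                T[o_u:o_u + n_u, offsets[v]] += exit_u * p
    return words, offsets, T


# Toy example: two 2-state left-to-right word models ("yes", "no").
A = np.array([[0.6, 0.4],
              [0.0, 0.7]])
exit_probs = np.array([0.0, 0.3])  # a word is left only from its last state
word_hmms = {"yes": (A, exit_probs), "no": (A.copy(), exit_probs.copy())}
bigram = {("yes", "yes"): 0.2, ("yes", "no"): 0.8,
          ("no", "yes"): 0.5, ("no", "no"): 0.5}
words, offsets, T = build_composite_hmm(word_hmms, bigram)
print(T.sum(axis=1))  # each row sums to 1 (up to rounding)
```

Because every bigram in the toy example is nonzero, each word can follow every other word. This is what makes the joined network behave as one ergodic model rather than a set of isolated word models.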

The transition probabilities from one word HMM to another correspond to word bigram probabilities, which are estimated from an existing text database. The word HMMs themselves are trained on a speech database of word utterances using the Baum-Welch algorithm.
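The bigram estimate from a text database can be sketched as plain relative-frequency (maximum-likelihood) counting, P(next | prev) = C(prev, next) / C(prev). The function name `estimate_bigrams` and its input format (an iterable of tokenized sentences) are hypothetical. The sketch applies no smoothing, so word pairs absent from the text get probability zero. A practical system would typically add smoothing or backoff, which the text does not specify.

```python
from collections import Counter


def estimate_bigrams(sentences):
    """Maximum-likelihood word bigram probabilities P(next | prev).

    sentences: iterable of token lists, e.g. [["yes", "no"], ...].
    Returns dict (prev, next) -> probability; no smoothing is applied.
    """
    pair_counts = Counter()     # C(prev, next)
    history_counts = Counter()  # C(prev) counted only where a successor exists
    for tokens in sentences:
        for prev, nxt in zip(tokens, tokens[1:]):
            pair_counts[(prev, nxt)] += 1
            history_counts[prev] += 1
    return {
        (prev, nxt): count / history_counts[prev]
        for (prev, nxt), count in pair_counts.items()
    }


corpus = [["yes", "no", "no"], ["no", "yes"]]
probs = estimate_bigrams(corpus)
print(probs[("no", "yes")])
```

Counting the history only where a successor follows ensures that, for every word seen as a predecessor, the estimated P(next | prev) values sum to 1.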